Way back when I started this blog, I wrote my definition of testing. I've revisited it many times, and sometimes expanded upon it.

It's an important thing for testers to revisit their definition of "what testing is about". Throughout this blog I have explored and revisited many facets of testing, and on the train today, I felt compelled to revisit this once again.

This is my current take on testing - it might change tomorrow, or next year, but it will change. It's not infallible, but it's not meant to be. It's designed to be a vision that is readable and engaging.

The Mission Of Testing

The mission of testing is to seek out unexpected behaviour when a system is placed within differing scenarios, and to make both the quest and its findings known to those who make decisions.

The prudent tester knows that as they are exploring the unknown, they can never know how much "unexpected behaviour" is left to discover, but can only estimate the risk based on what they know to work (or otherwise).

The Determination Of Scenario

The imaginative tester uses their skill to derive scenarios for testing from their understanding of the system through methods including - but not limited to - requirement, business value, standard expected behaviour or simple curiosity.

The Management Of Testing

The experienced tester knows that the time allocated for testing is finite whilst variations and imagination are almost infinite, and thus there are always more scenarios they can imagine than the time available.

Hence, they will always prioritise the most compelling scenarios first: those which are most representative, or where the expected risk is greatest.

But the wisest of testers will make these choices transparent to all, both peers and those with the power to make decisions, and will encourage ownership and debate beyond those who call themselves testers. They will seek to incorporate such direction where it is valid, but champion and mentor when they feel it is inappropriate.

The Execution Of Testing

As the mission of testing is seeking the unexpected, and as they are trying to fit the infinite variation of scenario into a finite time-box, the visionary tester knows it is more important to use their time to explore as many scenarios as possible, and will keep any documentation for this activity as lightweight and unobtrusive as possible.

Thursday, August 29, 2013

Monday, August 26, 2013

Insecurity 105 - Growth through role play

The Art of Role Play

We've all been in a situation at work where we've had a conversation that's gone really badly, and it churns over and over in our mind for days and weeks afterwards as we sketch out all the things we would have liked to say.

In my book, The Elf Who Learned How To Test, I put one of the elves – Magnus – under duress in a conversation with management which goes badly. The conversation troubles him considerably, and he ends up revisiting it a lot. So much so that when he's in a similar position later on, he's thought through the conversation, and handles it much better.

Experience is a great teacher – we are put into scenarios which don't go brilliantly, and we learn to handle them better through internal retrospection and trying things differently. Hopefully there are people who can guide us and whom we can learn from, and we're never put into a career-ending position. But it's always a risk.

What if there was a way to get the experience, without the career-ending jeopardy? Oh wait … there is … welcome to role play.

When I talk about role play here, I mean the acting technique rather than the Dungeons and Dragons variety, where you use dice and magic spells.

I spoke a little about acting techniques when I talked about my friend Michael Powell, and our drama group, A Flash Of Inspiration. The core idea of role play is that you're put into a scenario, and have to act out your responses. But the scenario is played out with a coach or mentor in a safe, private environment, and you get to analyse your responses.

As such, role play is a great way to get experience of scenarios, and explore some of the things we'd say when under duress. However a lot of people have difficulty getting into the spirit of it – it feels (even to actors such as myself) a little silly and awkward at first. Last week at STANZ I got to join in with Matt Heusser in an exercise with an element of role-play, and got a little too into character – but it was interesting to see how my test team would respond to questions and scenarios from me as a “head of software development”.

Here are a few pieces I've learned when I need to role play to prepare someone …

Keep it safe, keep it secret

Book a room on another floor if possible that no-one can see into and wonder “what are they up to?” - you don't need distractions. What happens in the role play session needs to stay in the role play session – it needs a level of trust.

Differentiating between you talking as a coach and talking as a character

Watching Matt at STANZ, he would often have to make a point of saying “I'm coming out of character to tell you this ...”. It helps to have a very visual distinction. I sometimes like to wear a cap as a mentor, and take it off to play the character, so it's very clear who I'm talking as at any point in time. It also means the coachee in the role play can ask you to put the coach cap back on if they need help.

This may seem all very wishy-washy, but it helps for the person you're coaching to know who you are playing at any given time.

Give the coachee options

Much like Who Wants To Be A Millionaire, give the coachee a couple of lifelines. These help if it's getting a bit too intense, and they need help. Remember role playing has to happen in a safe environment – it is without doubt the most important thing. The coachee needs to feel stretched and a bit out of their comfort zone, but feeling actual duress can be unhelpful.

A few standard ones I use:

- Timeout – simply that they want to pause for a moment because it's getting too intense.

- Cap on – asking me to put the coach's cap back on, and break out of character to give advice.

- Swapsies – to be used occasionally, but it allows the two of you to swap roles for a while, so they can see how you would react to such questioning.

Debrief

Don't just do the role play exercise – go through what you've done together. Pick out the bits they did well, point out any glaring mistakes, and explore possible better alternatives to handle what was thrown at them.

Does it help?

It certainly does. The more you practise certain scenarios, the more you're able to give sensible answers under duress. Through similar role play with test mentors I've had, I learned to handle questions about defects from management better, and it became the Teatime With Testers article, “Understanding the defect mindset”. Indeed, some of those questions are excellent to use in a first role play if you so desire.

Likewise, in my Rapid Software Testing course, I got to work through an amazing role play with James Bach which tested and stretched me considerably. The more you practise such scenarios, the more natural and fluid your answers will be, and the less you'll be heading home going “I wish I'd said ...”.

Thursday, August 15, 2013

ISTQB - is your name on the list?

Your name is also going on the list ...

I noticed today that the ANZTB put a list up here on their site of everyone who has ever taken and passed the ISTQB exam. Granted, there are no other personal details, but in this day and age, and in an industry where we should really respect the confidentiality of client data to the utmost degree, I was a little shocked.

I'm more shocked that I recognise a few names on it as testers I know from around Australasia who are quite vocal opponents of ISTQB certifications. And yet, alas, their names are displayed on a list, their very presence there seeming to lend weight to and endorse these exams.

I actually raised this with a couple of names on the list, who said that when they were starting out in their career, ISTQB was the training they were provided, and they were too young to understand there were other training options than ISTQB. In fact for many, ISTQB (or ISEB in my day) was sold as the "only training you needed".

Look through the list and you will see names like Aaron Hodder, Brian Osman, David Greenlees, David Robinson, Katrina Edgar. I know many of these people. They took ISTQB, went to their testing jobs, and found that what they learned often wasn't good enough to help them cope with the many real world challenges that were thrown at them. Testing had to be more than following a paper model; it wasn't always an A, B, C or D choice, and it required more imagination than that.

Many of these people have chosen to align themselves with the Context Driven School of Testing, a school which says there is no "one way" of doing testing, and to which I feel a lot of affinity. They have sought out mentors like James Bach and Michael Bolton, they have brought peer conference events like KWST to New Zealand, and WeTest to Wellington. All of this they've done because they're seeking more answers than the ISTQB syllabus can provide.

I emailed a couple of them, partly to pull their leg a bit (I'm a sensitive soul like this), but partly to say "do you know your name is displayed on the ANZTB site for all to see, associated with ISTQB?". A few were shocked.

Fortunately if you have strong feelings, and wish to have your name removed, you can request this by emailing info@anztb.org

[I'm lucky myself they don't put up promotional lists for those of us who took the earlier ISEB qualification, and under the UK Data Protection Act, I'd argue that putting my name up would actually be illegal for the UK board!]

Wednesday, August 14, 2013

Insecurity 104 - the 360 review

When I was talking about feedback, I also touched briefly upon the idea of 360 feedback for testers, which Lisa Crispin asked me to expand on a little …

Of course in that article, we’re talking more about ad-hoc feedback, and the importance of giving it throughout the year. A 360 feedback is a much more structured and formal way of giving feedback, which complements ad-hoc, and can be a framework for working with a staff member to help develop them through mentoring.

Our jobs involve a lot of elements. What am I doing well? What could I do better? We can worry about these things, but without getting some form of feedback, we’re just guessing. Maybe in some areas we are too harsh on ourselves, whilst in others we think we’re doing superbly, when really we’re falling into a trap of overestimating our competence. Without some feedback, we’ll never know, and worse still, we may be fretting about the wrong things.

It’s easy to see the process of feedback through review or appraisal as a negative and soul-destroying piece of HR. And some companies do indeed wield it as such. But the process really needs to be a constructive one, which is focused on putting time and energy into helping people develop.

During the performance appraisals I've done, I’ve often spoken to a number of members of staff, and talked about how we enter our career in our early 20s, and exit in our late 60s. We’re not going to just “develop a level of skill” and stay static. Every year will involve some level of growth and learning, either in a technology area, people skills area or just about a project area we’re working on. We’re not ever going to be able to just “coast” knowing what we do now from year to year.

Collecting viewpoints for a 360 review

A 360 review involves taking feedback for a staff member from a number of peers within our wider team (yes – not just testers). The form asks questions about each peer’s view of the staff member’s competency across a number of areas.

I’ve tailored our 360 form to touch on a series of diverse competencies which, knitted together, form the “tester skillset”. It’s tough – the questions have to be diverse enough to cover a wide range of topics (it's 360 because it allows you to see the whole picture after all), but not so numerous that people get bored and don’t want to participate in giving feedback.

I try to set the tone for the feedback with the following upfront statement:

Thank you for your commitment to provide feedback for this software tester. The process of feedback allows team members to find out areas in which they’re doing well, together with identifying areas in which they can develop and set goals for the coming year.

In providing this feedback, you’re helping to invest and develop another’s career. Although comments made here will be treated anonymously, we strongly recommend you attempt to give anything important directly to the person being reviewed – whether that feedback is around areas of success or suggestions for growth.

I think the core questions when asking for feedback on a tester cover these areas:

- What is their work ethic and drive to succeed like?

- How well do they communicate?

- How do they give feedback (including defects)?

- How good are they at problem identification and taking the initiative?

- What is their technical ability like?

- What is their understanding of the core business like?

- What areas of growth do you see for this person?

I only expect one answer to each core question, but I provide some sub-questions to stimulate a response from the person giving feedback.

What is their work ethic and drive to succeed like?

Areas to think about,

- How do they approach their work?

- Would you describe them as a professional?

- Do they have passion to succeed?

- Do they seem to enjoy their work?

- Do they champion the company values?

How well do they communicate?

Areas to think about,

- Do they express their viewpoint well?

- Do you feel your position is respected?

- Do they form positive relationships in our team and with the customer?

- Do you feel you’re working in the same team as this tester, or against them?

How do they give feedback (including defects)?

As testers the main way we add value is the feedback we give on software, often in the form of defects. Being able to give effective and appropriate feedback, whether on software or a document, is thus a key tester skill.

Areas to think about,

- How does this tester approach you with problems?

- Do you feel comfortable asking this tester for feedback on yourself or a piece of your work?

- Do you feel they raise the right level of problems? Too many? Do they miss items?

How good are they at problem identification and taking the initiative?

Areas to think about,

- When given a new piece of work, or encountering a defect, have you observed this tester’s approach to identifying and solving problems?

- What could be improved in their approach? What has worked well?

- When a serious problem has occurred, have you witnessed this tester using their initiative to make decisions and attempt to get resolutions? How effective were they?

What is their technical ability like?

Areas to think about,

- Are they competent in any tools they need to use?

- What are the areas of technical strength for the person?

- Have they displayed having an understanding of how applications are intended to work?

- Have they shown an understanding of the suite of applications we develop in our group?

- Are they able to work beyond just requirements (when required) to understand how the application should work?

- Are you aware of any areas of opportunity in which the tester can further develop their technical ability?

What is their understanding of the core business like?

As an IT business, we’re not just producing software, but business solutions. How well does this tester demonstrate an understanding of the important values and factors of this business?

Areas to think about,

- How has this tester shown understanding of the end users and customers of the system under test?

- Does this tester show understanding of the business solution we’re trying to achieve in our software?

- How has this tester shown understanding of the target market we’re developing software for?

What areas of growth do you see for this person?

Areas to think about,

- What should this person do to help improve what they contribute to their team?

- As you have observed them, how does their ambition compare with their abilities at present?

- What things would help their growth in their current role?

- What areas of challenge should they seek more of that are out of their current comfort zone?

Of course, no tester is really expected to excel at all of this – some will be better at business understanding than technical understanding or vice versa, for instance. This isn’t really for measuring a tester; it’s for showing them where they’re doing well, and where they might want to set some goals, either to address things they’re not happy about, or to extend themselves in an area they enjoy and “take it to the next level”.

I collate all the comments, try to keep them as anonymous as I can, and add my own commentary where possible when I think there’s something additional that needs to be added (which I make clear as being from me). It’s important to mention here that everything needs to be done confidentially. Hence I keep all the comments in my private drive, and even book a meeting room to go through them in private on my laptop. People deserve that these notes aren’t available for all to see if you get called away from your desk!

For most people, this is going to be really simple, but for a few people, there may be issues highlighted through the feedback which need investigation, or prior thought before giving. If I feel out of my depth, I know that I need to talk with someone in higher management or HR about how to go about handling it if I’m expecting issues.

Giving the results of the feedback to the individual

With most people, there is an unspoken elephant in the room that the 360 review feeds into a pay review. This makes people tense for obvious reasons.

I can’t speak for your pay scheme, but for myself, it makes sense to put the 360 review separate from the performance appraisal. I let the individual know that the 360 review feeds into the performance appraisal, but it’s a part, not the whole thing. Ideally the two things need to be spaced out, to allow for some reflection between them.

Giving the 360 review needs privacy and confidentiality (again meeting room, if possible far away from the team). I need the individual I’m doing the review with to feel relaxed, have an open mind, and to not feel on the defensive. I book at least an hour, I’m not just going to go through the feedback but give them an opportunity to discuss how they feel.

Before I go through the appraisal I reiterate the goals of this exercise: to give them feedback about their strengths, and to investigate areas they want to grow in, to feed back into our mentoring sessions. This helps put them at ease beforehand. I then go through the responses an area at a time, discussing how they feel about the comments, and if they think they are fair. I give them time to explain anything they’re unhappy with. I try to be sympathetic if they feel it’s unfair, but try to offer details where I think they’re mistaken. It’s important that you don’t let them convince you the whole world is wrong. If they’re agitated, I give them time to calm down. If they won’t, I have to consider terminating the meeting (again, it will be really rare that it gets to this).

That said, some feedback will be “unfair”. Sometimes an individual will be seen to be negligent but it is untrue. The problem isn’t the tester is negligent, but that people aren’t seeing a particular activity happening. For instance, I once had feedback that my testing was disorganised, and I never recorded what I did. That was actually untrue. But what was happening was I was not advertising enough where all that information was, and broadcasting enough when it got updated. I worked with a delivery manager to address that issue. It’s our job to try and determine what the truth of a situation is (wisdom of Solomon needed here). But again, this is why I need to do any groundwork on anything that’s likely to be contentious.

This feedback of course will create a roadmap of things the individual wants to develop. Sometimes it’s a roadmap of mentoring and upskilling (which we’ve covered in previous articles). Sometimes it’s about making something they do more visible to the rest of the team (without over-advertising). Generally it’s good to set 3-5 realistic goals for the year ahead for development (too many goals, and they’ll easily fall by the wayside). Goals that feed into the work environment ahead. I have talked about SMART goals in the past…

If someone has goals for a certain area, I make sure that they’re considered for any upcoming work that matches (but I try to avoid showing them undue favour). It’s important to try and play fair by their aspirations – being fair is incredibly important to me.

But if they have goals I just cannot match them to, it may be worth me asking how important those goals are and if they want to potentially transfer to another unit that can (even if only on a temporary basis). No-one can keep hold of talent whose heart lies in work that you can’t provide for them. If it’s that important to them, you can’t promise what you can’t deliver. People tend to respect being dealt with frankly and having these cards put on the table.

The 360 review process seems scary – especially with the rare “what if someone takes this badly” scenario – but it’s worth it. Never lose focus that this feedback is about being constructive. You are not doing this to make someone feel bad about themselves, but to genuinely help them to develop themselves. If the review you have prepared doesn’t feel like that, go back to the people who have provided you with information, and get them to expand.

It helps if you have a regular relationship with this individual - at a past company I only used to see the manager who did my 360s about 3 times a year, so there wasn't that relationship. Also remember if you're giving information in the 360 which feels like a bolt-out-of-the-blue surprise, then something is fundamentally flawed with your department. Nothing should come as a huge surprise. Feedback should be happening throughout the year, and this should just be collecting it up, with the odd omission, but again nothing that's going to shock.

360s are worth it because they give us meaningful information as a "big picture" as to where our efforts are best applied in developing our testers to be the best they can be. That information feeds back to the tester, and it feeds into our mentoring and one-on-one sessions to give them real goals.

Saturday, August 10, 2013

Insecurity 103 - Developing through mentoring

In the last couple of articles, we've looked at how uncertainty and insecurity are part of being human, and how feedback can help us explore how we are really doing. Feedback is a powerful tool to allow us to understand our strengths, and identify areas for growth. But to really develop we need access to people who will coach and mentor us. It means we need to seek out mentors, but also that we need to learn to mentor the more junior staff we work with ...

When my sister-in-law Emily was over in May, I managed to take her to a Body Jam dance class. It was quite an experience for her, as she’s a trained ballet teacher, and found the format of an “aerobic dance class” interesting. On the way back we were talking about Kara and Brandon, two of my friends who are training to become Body Jam instructors themselves, and the journey they’re going through.

Both of them have always been noticeable in class as having perfect technique, and successfully auditioned in May 2012 for several potential instructor slots. However, passing the audition was just the start of their journey. You don’t get given an MP3 player and some groovy songs, and just thrown in front of a room full of people who want to do a dance class - that would be a great way to burn through instructors!

Taking that approach, some instructors would do well, but many would flounder, and end up leaving. Meanwhile you’d have an audience of customers who’d be so turned off from the not-so-good instructors, they’d have voted with their feet and found a local Zumba class instead (Zumba is “the competition” for Body Jam, so that’s really not good).

Such a method would be an almost Darwinian “survival of the fittest” dance-off for instructors, who would have to sink or swim under their own power. Like Flashdance meets the Hunger Games.

Thankfully Les Mills Body Jam is NOTHING like that. Being “accepted” through auditions means you are put into a program which will eventually create an instructor. But this program focuses on coaching and mentoring to develop world-class instructors.

For the Juvie Jammer such as Kara or Brandon, they’ve shown they’re capable as a participant, but now it's time for the next step. They’re put into weekend training sessions with fellow instructors, where they’re all put through their paces on dance moves, especially for upcoming releases of classes. [Body Jam classes have a very tight set of choreography for each “release” which has to be learned – it allows Les Mills customers to attend any Body Jam class in the world, and know they’ll be doing the same release]

The Juvie Jammer has to show they know the routines and can execute the moves in a workshop environment. Then comes the first test of nerves, “shadowing”. They’re allowed on stage in a class with an experienced instructor. They are silent throughout, basically acting as a “shadow” copy of the lead instructor’s moves. It gives the Juvie Jammer their first taste of facing a room full of eyes watching them intently, their first case of stage nerves, and of trying to remember everything when put on the spot. The instructor then gives the Juvie all-important feedback and tuition after the class to help them develop - what went well, what didn't, things they can think about doing next time.

This will go on over a few months, and eventually they will be allowed to “lead a track”. This is much like “shadowing”, but for a period of about 5-10 minutes in a class, the experienced instructor and the Juvie Jammer swap places - the Juvie leads and gives instructions, whilst the experienced instructor is the silent shadow. This goes on over several months until the Juvie is leading for half of a class (about 30 minutes), all the time with the mentoring from the experienced instructor helping with their technique, nerves, what to say, what to do.

It’s only at this point – at least 6 months later, that the Juvie is really let loose on stage as an instructor, although even then, they might co-host classes for a while, all the time getting that instruction and direction afterwards. Before too long, they’ll end up on stage “solo”, and they’ve graduated!

But even at this point, the coaching doesn’t stop. The Head Teacher for Body Jam will observe a class, and film them four times a year, and make notes during a class. This will then turn into a one-on-one meeting, where feedback of what’s working or not is given.

Furthermore, as an attendee of a class, you’re encouraged to give feedback directly to instructors. If you enjoy classes, and want to build a relationship with instructors, this is well worth doing, even if sometimes you have suggestions in that grey minefield of “maybe it would be better if …”. At the end of the day, they prefer that over you staying silent, no longer attending, only to be found "gone rogue" in a Zumba class some time later.

So far this might be interesting and whimsical to an audience of software testers. But the point to this piece is that this process described isn’t just the way you train and develop someone to be a Body Jam instructor. It’s the way you can coach anyone to do any role you can imagine. The framework applies anywhere.

Give them mentoring, give them some room to prove themselves and try tasks and jobs outside their comfort zone. As the experienced guru, give them feedback and consult with them about what they're doing that works and what doesn’t. But try to avoid moulding them into a copy of you: if they try something and it works, credit them, even (and especially) if it’s different to what you would have done. Allow them to try things, and take “next steps”, but monitor and review as they do, until they show they’ve mastered it. Then find the next step. This way they learn by doing, and it means more to them than you just showing, because the experiences are their own.

During KWST3, I gave an experience report on developing and training testers, which was built around a similar method of mentoring through one-on-ones, and giving people new challenges, whilst monitoring to reduce any risk. However, my talk focused more on “the teaching of science” as its core model.

This turned out to be quite relevant, as the following experience report by Erin Donnell was about how she worked as a data analyst, only to be told one day that she and her colleague Jen had been arbitrarily press-ganged into testing with no prior background. "So get testing". There was no existing test team or support … it was sink or swim time. However, she managed to find mentors and coaches outside of her organisation in Ilari Aegerter, Aaron Hodder and James Bach: people who helped to guide her, whilst at the same time she herself took charge, taking huge leaps of initiative to learn, seeking out BBST courses.

One issue about mentoring that Aaron mentioned during my experience report was whether it would cause “a cloning of software testers with the same mentality and approach, and potentially stifle fresh blood in the industry”. It’s a good point, but goes back to what I said about if someone does something and it works, tell them they did good, even (and especially) if it’s different to how you do it. This is what’s so exciting about doing mentoring – that once in a while the person you mentor will end up opening your eyes, and you’ll see something you think you know too well in a different light!

In the Body Jam world, instructors teach the same class, but they have vastly different styles, and this is really encouraged. In fact they are urged to try to avoid copying other instructors' styles, but to seek out "their own authenticity", because it's about instructors "being themselves". This is why in the Wellington region you have personalities like,

- Mereana (the head teacher), who is very much like your bubbly best friend

- Pete, who is the reserved but focused Mr Miyagi of dance, with a very dry wit

- Lil Jess and Rhodene who together specialise in hip-hop gangster dance style, with classes full of attitude with a capital A.

- Cade who is what High School Musical would be like if done by David Lynch. He is actually one of the chorus line in a number of musicals that run in Wellington.

- Nic who is like a Jazz-dancing kids TV presenter

Within the testing world, the same is true. New blood, new ideas, new initiative is vital to help nurture and develop for the good of our industry. We need a next generation of testers who won't just copy and paste the way we test, but who will know to change it and modify it to suit their needs.

We don't need the next James Bach, Lisa Crispin or even Jerry Weinberg. We need the first Aaron Hodder, Erin Donnell, Jari Laakso ...

[Oh if you want to know what Body Jam looks like ... not my Wellington crew, but pretty cool video never-the-less ...]

Wednesday, August 7, 2013

Insecurity 102 - Are you getting feedback?

In my previous piece, I talked about how insecurity can blight us all, and how peer networking and feedback can be a useful tool to combat it. Here I'm going to expand on this a lot more and explore getting feedback, how to do it and how it helps.

Feedback is vital for everyone: it lets us know what we do well, and lets us know areas where we need development. It helps keep us rounded individuals, neither insecure nor overconfident.

However, it’s something we shy away from, or worse still feel that feedback “should only point out flaws”. As testers our focus is really to give individuals “feedback on a piece of software”, and indeed, that mainly means “defects”. But we’re in danger there of giving way to the critic’s syndrome of Waldorf and Statler, pointing out flaws from the safety of a box.

But in my opinion, feedback should always be about being positive. Some people will roll their eyes and go “so we can only say nice things then … political correctness gone mad!”. But that’s not what I mean. Feedback can say what someone does well, but it can also say when someone is weak in an area. But here’s the tricky thing, and why many of us shy away from dealing with this side … if someone is weak in an area, you need to give them the feedback in such a way as to “light the way” so they can make decisions about addressing it. Such feedback needs to be sensitive and about helping the individual to develop, not about destroying them.

And that’s hard to do. It’s all too easy for it to be needlessly brutal. Imagine your best friend wants to go on X-Factor and sing in front of the judges. The only problem is, you know that their singing stinks. Are you going to give them feedback for this, or let them go in front of the judges and be torn to pieces?

I’m a regular at Les Mills gyms in Wellington, and do a lot of dance classes, called Body Jam. I was asked by the Head Instructor for some feedback on her classes at the weekend, and my response was,

I have been going to Mereana's classes for about 3 years. In that time I've seen her develop and mature her instructor style considerably.

Mereana is very positive, engaging with the class in a distinctive, bubbly manner. She is very strong in giving clear direction, and is good at giving adaptive instruction to the audience when she sees people have injury / struggling with choreography. As such I really feel everyone feels both engaged and pushed from her classes.

This led into a conversation with Mereana, where I said that although we were talking about this now, I really had hoped over the last few years I had given her that feedback in bits and pieces, and none of it came as a huge surprise. I know I’d spoken to her about how much she’d moved from “following a choreography script” to elegantly and confidently “living the values” of the program, and giving something personal and unique to her instruction. You see her teach today, and you know there's good reason why she's currently the head teacher for the program.

Back at my office, however, it’s appraisal time within my team, and I’m busy getting 360 reviews complete for them. But the same theme is still there with my staff as it is with Mereana. It’s too late when someone asks for feedback after 12+ months to really be giving anything that surprises. We need to be giving feedback at work to our peers regularly, and the appraisal needs to be a “collection of all that’s been said over the year".

Feedback need not be frightening. Poor old Phil Cool from my last article, gets feedback from everyone with a voice or a pen - it might be me saying he’s good, it might be a critic with a secret axe to grind, it might be a drunk heckler who thinks he's funnier.

We are lucky in our office environment that we have more intimate relationships with the people who can supply our feedback. And if we don’t feel some trust in someone we work with for feedback, then maybe we have to ask ourselves if we’re in the right team. Trust in (the majority of) those we work with is vital, but there is always going to be some conflict.

If you want to explore more the idea of giving feedback, I cannot recommend enough Johanna Rothman's book “Behind Closed Doors” which is very much about the process of one-on-one meetings, giving this kind of feedback and coaching to help develop staff, and take them further.

Tuesday, August 6, 2013

Insecurity 101 - The Phil Cool Factor

As kids growing up in England in the 80s, we all were entranced by comedian Phil Cool. He was an impressionist with an amazing ability to contort his face into different shapes. I remember how excitedly we'd talk about the previous night's show in the corridors of Abbot Beyne High School in between classes.

Here's a few examples of Phil at his best ...

You might have noticed this blog has a lot of comic tones, and maybe you think I'm making a joke when I say that I do actually take comedy really seriously. But I love comedy which is clever and unexpected, I despise comedy that is cheap. So you can imagine how much I was in awe when in 2009 I actually got to meet my comedic legend quite by accident at a Fairport Convention concert.

He was actually part of a folk support act that played before the main band. Some of his songs were typically comic, but some really very straight. At the end of the show I bumped into him whilst waiting for a friend.

What happened was a humbling eye opener for me. Phil Cool, quite literally the coolest comedian on the TV in my childhood - a man so very loud and funny on stage - but in person ... quite a shock. I got speaking to him, and said I'd really enjoyed his set, and what an interesting (but different) venture. Although really polite, he seemed quite nervous, and blushing said it was nice to hear that. The man before me wasn't a loud and bombastic comedian, but a humble, somewhat uncertain human being who struck me as doubtful of his own (immense in my opinion) talents.

Rather than shatter my perceptions of him, I came away fascinated that someone I looked up to was not the stuff of legend, but a flesh-and-blood human being not so different from myself.

You see we all have doubts. It's a very human thing to have internal fears and insecurities.

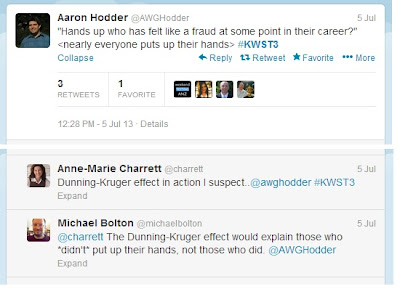

During KWST3, talking about the same cloud of doubt, there was a point where Aaron Hodder asked people to put up their hands to say if they ever felt a fraud as a tester ...

Most of the room put theirs up, but I have to admit I didn't put my hand up. Commentary from Michael Bolton suggested that was because of the Dunning-Kruger effect. This is the "grad phenomenon" where someone just out of University thinks they know everything, whilst someone with 20 years experience seems to show less superiority because they're more aware of their limitations. People who put their hand up were experienced testers who knew their limits, whilst those like myself who kept them down were "deluded newbies" who thought we knew it all.

However for myself, despite Michael's criticism, I've been in the industry, and in teaching and research science enough to know I am a tester. In my time, I've thought enough to get feedback and to ask around both my superiors and my peers, and it turns out that much like Phil Cool, insecurity is a common thing. We're often hiding it beneath bravado.

I never think of myself as a fraud as a tester, simply because I've had a couple of non-testing careers. I would not have spent 16+ years in the IT industry if I was plagued by doubts about me belonging here or having worth here. Simply put, life is too short, and I would have abandoned ship long ago.

But it doesn't come easy. I've had to get to know others, be open, and sometimes talk about how I feel out of my comfort zone with peers that I trust. My wingman on RAF projects 10 years ago was John Preston, and we still exchange emails talking about problems and challenges we face in IT. Through both my career and through the internet, I've built up a wide peer network, many of them people I trust to ask big questions.

I've also looked up and followed some industry leaders in IT, and got to know a few. They can seem to tower over us as Titans, and we can look at our projects and go "I bet Heracles Tester doesn't have these problems". What has surprised me as I've got to know a few better, is that the Phil Cool effect takes hold - even in IT. There are people who seem superhuman figures, powerhouses who can get things done. But the more you get to know them up close, the more you realise they are not Testing Titans who have been empowered with mighty and mystical powers from the Gods Of Testing themselves. They are human beings, vulnerable and at times caught and ensnared in their own emotions. Just like you or me.

This revelation has not diminished them in my eyes - it moves them from being something iconic (but also people I can't relate to), to being more peer in nature. But at the same time, they become people I can relate to more, and who inspire me more in daily life. Because if they do not have to "skip the hard yards" because of their status, if they have to go through the same assault course, then maybe I can too.

I know being a "famous" tester doesn't mean the learning curve of a new project is any easier, it doesn't exempt you from the occasional tussle at work, having to explain your position as a tester or having to do the (occasional) extra hours to get a project launched.

With the knowledge I've gained through networking, that there are no magic solutions or attributes, there was no purpose in putting my hand up to feeling like a fraud. It serves no-one to be modest. And as I said, if I genuinely felt like that, I would have jumped to a new profession by now.

That said, I do have doubts and even insecurities. To steal the words of Douglas Adams, I'd like to call them "rigidly defined areas of doubt and uncertainty". I worry sometimes there are things when planning testing that I don't know, areas I'll not think to test enough, bad assumptions I'll make.

These doubts are not about me as an individual, but about whether I know or have thought about enough things about what I'm testing. But then again, isn't the first law of testing that the profession exists because people are fallible and make mistakes (in code or design)? Given that human frailty, why would testers be exempt?

The answer to that is the same as with testing, and the same as I mentioned about personal insecurity. We put it out to a network and get feedback. We put it in front of people we trust (hopefully the people you work for), and ask for their opinion, critique and questions. Then the planned testing isn't a one-man-band, but the sum of the ideas of everyone on the team, some of whom might have different ideas from you about how things work.

Getting people to positively critique to make something better is a superb strategy for fighting neurosis and feeling more confident about what we do. At the end of the day, giving critique and feedback to produce a positive outcome pretty much sums up what we do as testers.
